Note: I ran out of time weeks ago. I could never finish this serie as I envisionned, and I don’t see much free time on the horizon. Instead of letting this linger forever, here is a truncated conclusion. The previous episodes were :

- Part 1 : probably the best episode, about the basic game types.

- Part 2 : definition of the game rules in an unspecified monad.

- Part 3 : writing an interpreter for the rules.

- Part 4 : stumbling and failure in writing a clean backend system.

In the previous episode I added a ton of STM code and helper functions in several 15

minutes sessions. The result was not pretty, and left me dissatisfied.

For this episode, I decided to release my constraints. For now, I am only going to support the following :

- The backend list will not be dynamic : a bunch of backends are going to be registered once, and it will be not be possible to remove an existing or add a previous backend once this is done.

- The backends will be text-line based (XMPP and IRC are good protocols for this). This will unfortunately make it harder to write a nice web interface for the game too, but given how much time I can devote to this side-project this doesn’t matter much …

The MVC paradigm

A great man once said that “if you have category theory, everything looks like a pipe. Or a monad. Or a traversal. Or perhaps it’s a cosomething”. With the previously mentionned restrictions, I was able to shoehorn my problem in the shape of the mvc package, which I wanted to try for a while. It might be a bit different that what people usually expect when talking about the model - view - controller pattern, and is basically :

- Some kind of pollable input (the controllers),

- a pure stream based computation (the model), sporting an internal state and transforming the data coming from the inputs into something that is passed to …

- … IO functions that run the actual effects (the views).

Each of these components can be reasoned about separately, and combined together in various ways.

There is however one obvious problem with this pattern, due to the way the game is modeled. Currently, the game is supposed to be able to receive data from the players, and to send data to them. It would need to live entirely in the model for this to work as expected, but the way it is currently written doesn’t make it obvious.

It might be possible to have the game be explicitely CPS, so that the pure part would run the game until communication with the players is required, which would translate nicely in an output that could be consumed by a view.

This would however require some refactoring and a lot of thinking, which I currently don’t have time for, so here is instead how the information flows :

Here PInput and GInput are the type of the inputs (respectively from player and games). The blue boxes are two models that will be combined together. The

pink ones are the type of outputs emitted from the models. The backends serve as drivers for player communication. The games run in their respective

threads, and the game manager spawns and manages the game threads.

Comparison with the “bunch of STM functions” model

I originally started with a global TVar containing the state information of each players (for example if they are part of a game, still joining, due to answer

to a game query, etc.). There were a bunch of “helper functions” that would manipulate the global state in a way that would ensure its consistency. The catch is

that the backends were responsible for calling these helper functions at appropriate times and for not messing with the global state.

The MVC pattern forces the structure of your program. In my particular case, it means a trick is necessary to integrate it with the current game logic (that will be explained later). The “boSf” pattern is more flexible, but carries a higher cognitive cost.

With the “boSf” pattern, response to player inputs could be :

- Messages to players, which fits well with the model, as it happened over STM channels, so the whole processing / state manipulation / player output could

be of type

Input -> STM (). - Spawning a game. This time we need

forkIOand state manipulation. This means a type likec :: Input -> STM (IO ()), with a call likejoin (atomically (c input)).

Now there are helper functions that return an IO action, and some that don’t. When some functionnality is added, some functions need to start returning IO actions. This is ugly and makes it harder to extend.

Conclusion of the serie

Unfortunately I ran out of time for working on this serie a few weeks ago. The code is out, the game works and it’s fun. My original motivation for writing this post was as an exposure on basic type-directed design to my non-Haskeller friends, but I think it’s not approachable to non Haskellers, so I never shown them.

The main takeaways are :

Game rules

The game rules have first been written with an unspecified monad that exposed several functions required for user interaction. That’s the reason I started with defining a typeclass, that way I wouldn’t have to worry about implementing the “hard” part and could concentrate on writing the rules instead. For me, this was the fun part, and it was also the quickest.

As of the implementation of the aforementionned functions, I then used the operational package, that would let me

write and “interpreter” for my game rules. One of them is pure, and used in tests. There are two other interpreters, one

of them for the console version of the game, the other for the multi-backends system.

Backend system

The backends are, I think, easy to expand. Building the core of the multi-game logic with the mvc package very

straightforward. It would be obvious to add an IRC backend to the XMPP one, if there weren’t that many IRC packages to

choose from on hackage …

A web backend doesn’t seem terribly complicated to write, until you want to take into account some common web application constraints, such as having several redundant servers. In order to do so, the game interpreter should be explicitely turned into an explicit continuation-like system (with the twist it only returns on blocking calls) and the game state serialized in a shared storage system.

Bugs

My main motivation was to show it was possible to eliminate tons of bug classes by encoding of the invariants in the type system. I would say this was a success.

The area where I expected to have a ton of problems was the card list. It’s a tedious manual process, but some tests weeded out most of the errors (it helps that there are some properties that can be verified on the deck). The other one was the XMPP message processing in its XML horror. It looks terrible.

The area where I wanted this process to work well was a success. I wrote the game rules in one go, without any feedback. Once they were completed, I wrote the backends and tested the game. It turned out they were very few bugs, especially when considering the fact that the game is a moderately complicated board game :

- One of the special capabilities was replaced with another, and handled at the wrong moment in the game. This was quickly debugged.

- I used

traverseinstead ofbothfor tuples. I expected them to have the same result, and it “typechecked” because my tuple was of type(a,a), but theApplicativeinstance for tuples made it obvious this wasn’t the case. That took a bit longer to find out, as it impacted half of the military victory points, which are distributed only three times per game. - I didn’t listen to my own advice, and didn’t take the time to properly encode that some functions only worked with nonempty lists as arguments. This was also quickly found out, using quickcheck.

The game seems to run fine now. There is a minor rule bugs identified (the interaction between card-recycling abilities and the last turn for example), but I don’t have time to fix it.

There might be some interest with the types of the Hub, as they also encode a lot of invariants.

Also off-topic, but I really like using the lens vocabulary to encode the relationship between types these days. A trivial example can be found here.

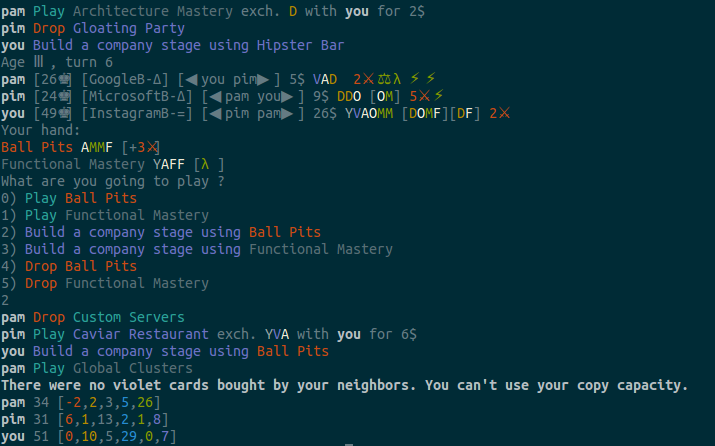

The game

That might be the most important part. I played a score of games, and it was a lot of fun. The game is playable, and just requires a valid account on an XMPP server. Have fun !