I finally uploaded a new version of language-puppet to Hackage. Here is a selection of the new features since 0.9.0:

- A complete rewrite of the PuppetDB subsystem, which is the focus of this article.

- A new `pdbQuery` binary that can query one of the new PuppetDB backends, but also dump the content of a real PuppetDB into something that can be consumed by the new TestDB backend. I will probably blog more about this feature in subsequent posts.

- An actual command line parser for the `language-puppet` binary.

- A very tentative new testing module, which is more or less the hspec package in a `ReaderT FinalCatalog`. This will require more work, and a dedicated post.

- Limited support for the new lambda methods of the future parser. The limitation is intentional, and will be described in more detail in another post.

The main change about PuppetDB support is from an API point of view. It isn’t a single function with a terribly vague type anymore (T.Text -> Value -> IO (S.Either String Value)).

It is now a set of functions, one for each supported command and endpoint, with a specific query type that can only define valid queries. This makes it easier to use a PuppetDB backend programmatically, or to give better error messages to the user of functions such as pdbresourcequery.
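To illustrate what "a query type that can only define valid queries" can look like, here is a minimal sketch. It is not the actual language-puppet API: the names `ResourceField`, `Query`, `render` and `example` are made up for this example, and only a handful of operators are modelled. The rendering targets the prefix-notation JSON arrays that PuppetDB accepts as queries.

```haskell
import Data.List (intercalate)

-- Hypothetical set of fields that can be queried on the resources endpoint.
-- (Illustrative only, not the library's real type.)
data ResourceField = RType | RTitle | RTag
  deriving (Show, Eq)

-- A query is a small syntax tree. Since it can only be built from these
-- constructors, a malformed query cannot be expressed at all.
data Query field
  = QEqual field String
  | QAnd [Query field]
  | QOr [Query field]
  | QNot (Query field)
  deriving (Show, Eq)

fieldName :: ResourceField -> String
fieldName RType  = "type"
fieldName RTitle = "title"
fieldName RTag   = "tag"

-- Render a query as a PuppetDB-style JSON array.
-- Assumption: 'show' on a String is a good enough JSON string encoder here,
-- which only holds for plain ASCII values without special characters.
render :: Query ResourceField -> String
render (QEqual f v) = array [show "=", show (fieldName f), show v]
render (QAnd qs)    = array (show "and" : map render qs)
render (QOr qs)     = array (show "or" : map render qs)
render (QNot q)     = array [show "not", render q]

array :: [String] -> String
array xs = "[" ++ intercalate "," xs ++ "]"

-- All Haproxy::Backend resources, except the one titled back1.
example :: Query ResourceField
example = QAnd [QEqual RType "Haproxy::Backend", QNot (QEqual RTitle "back1")]
```

With such a type, a typo in a field name or a misplaced operator is caught by the compiler, instead of being discovered when the server rejects a hand-built `Value`.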

Behind this API are now three backends:

- The dummy backend, which, as its name implies, is just a stub that will return empty answers.

- The remote backend, that connects to a real PuppetDB server.

- The TestDB backend, brand new, not really tested and the focus of our article.
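One way to picture several backends living behind a single API is a record of functions, with one value of that record per backend. The sketch below is only an assumption about the general shape, not the library's real definitions: `Resource`, `Backend`, `storeExported`, `queryByType`, `dummyBackend` and `newTestBackend` are hypothetical names, and the in-memory store stands in for what a file-backed TestDB would do.

```haskell
import Data.IORef (modifyIORef', newIORef, readIORef)

-- Hypothetical, heavily simplified resource: just a type and a title.
data Resource = Resource { resType :: String, resTitle :: String }
  deriving (Show, Eq)

-- One field per supported operation. Every backend is a value of this type.
data Backend = Backend
  { backendName   :: String
    -- Store the exported resources of a node, given its name.
  , storeExported :: String -> [Resource] -> IO ()
    -- Return all stored resources of a given type, or an error message.
  , queryByType   :: String -> IO (Either String [Resource])
  }

-- The dummy backend ignores writes and returns empty answers.
dummyBackend :: Backend
dummyBackend = Backend
  { backendName   = "dummy"
  , storeExported = \_ _ -> return ()
  , queryByType   = \_ -> return (Right [])
  }

-- An in-memory test backend: it remembers what each node exported,
-- replacing the previous entry when the same node stores again.
newTestBackend :: IO Backend
newTestBackend = do
  ref <- newIORef ([] :: [(String, [Resource])])
  return Backend
    { backendName   = "test"
    , storeExported = \node rs ->
        modifyIORef' ref (\db -> (node, rs) : filter ((/= node) . fst) db)
    , queryByType   = \t -> do
        db <- readIORef ref
        return (Right [r | (_, rs) <- db, r <- rs, resType r == t])
    }
```

Code written against `Backend` does not need to know which of the implementations it is talking to, which is what makes it possible to swap a real server for a local test database.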

The TestDB backend is a limited re-implementation of the features offered by a full-fledged PuppetDB server, with a focus on interactive development of manifests and modules. Instead of describing it in detail, I will walk through a (perfectly artificial) scenario where it comes in handy. In this scenario we have two backend servers, and a proxy. We would like to use exported resources so that the backends are automatically registered.

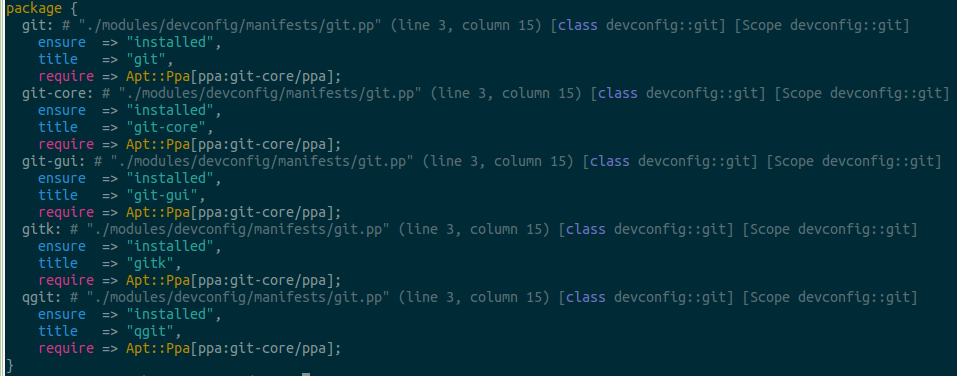


If we run our puppetresources with a new command line option, here is what happens:


There is a scary warning, and then the usual output of the puppetresources tool. We can see that Haproxy::Backend[back1] is an exported resource, and is not applied on the current node. Here is the content of the newly generated file:


This is a YAML representation of the catalog wire format. You can easily edit this file to test arbitrary conditions, or just inspect it. You can also include arbitrary facts to manually test for edge cases.

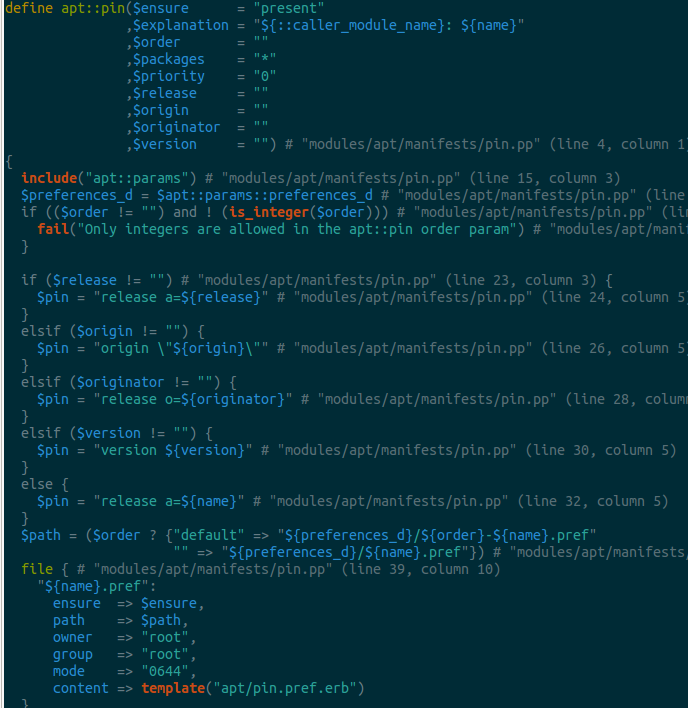

The frontend server includes the haproxy module, which looks like this:


Now if we run the puppetresources binary using the generated PuppetDB file, we get:


Note the new command line flags. The -n (resource name) and -t (resource type) flags accept regular expressions to filter resources. When used in conjunction with -c, the resource name must be the exact title of a file, and its content field will be displayed verbatim:


This new feature will let you experiment with exported resources in a very natural way, without having to ever compute in-development manifest files on a live Puppet master.